Chris Shinnimin

Final Fantasy Bot Project Journal

A fun personal project to learn LLMs and React, and rekindle my love of a favourite childhood game.

September 25, 2025

Prev | Project Journal Entries | Github | Next

Beta Version 0.1, Pausing the Project

I have come to a point just a few weeks after starting this fun learning project where I have achieved (at least to some degree) all of the target objectives of the bot app. My vision back on Sep. 2 was that the agent would be able to:

- Provide hints and tips based on the real time state and progress of the game

- Automate monotonous tasks

- Help a user cheat, just for fun

All of these objectives have been met to some degree. There is more fine-tuning that can happen, but at this stage I am going to wait until I begin my continuing education course with McGill next week. That course will teach me even more about prompt engineering and about using LangChain. I believe LangChain will be able to help me conquer my next challenge - eliminating the need for large initial training instructions to accompany every API call to the LLM. I'm going to focus on learning from McGill and circle back to this project once I have even more knowledge.

Streamline Initial Training Instructions

I did manage to reduce my initial training instructions from ~11,000 tokens to ~4,800 tokens by creating a useBestiaryRequest hook. This allows the LLM to look up the information it needs about enemies in the game, rather than relying on me to train it extensively in the initial training message. But ~4,800 tokens is still far too many. Because every API request needs to carry the conversation context, literally every message we send to the API has to contain this training message. And the way the application works, one message from the user with one response from FFBot may actually entail several back-and-forths with the LLM under the hood. So we quickly see how token usage scales: a conversation with FFBot of only 10 messages might involve 25-30 API calls to the LLM, so 30 * 4,800 = 144,000 tokens, and that doesn't count any of the tokens from the rest of the conversation. This approach does not scale. I hope to use the knowledge I gain from McGill to remove my dependency on this initial training message.
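The arithmetic above can be written as a small TypeScript sketch. The function name `trainingTokenOverhead` is made up for illustration and is not part of the FFBot code; the token counts and the 30-call figure are the rough estimates from this entry.

```typescript
// Hypothetical helper (not from the FFBot source): estimates how many tokens
// are spent re-sending the initial training message across a conversation,
// assuming the full message accompanies every API call.
function trainingTokenOverhead(trainingTokens: number, apiCalls: number): number {
  return trainingTokens * apiCalls;
}

// Rough figures from this journal entry: ~30 API calls for a 10-message chat.
const overheadBefore = trainingTokenOverhead(11000, 30); // before useBestiaryRequest
const overheadAfter = trainingTokenOverhead(4800, 30);   // after useBestiaryRequest

console.log(`before: ${overheadBefore} tokens, after: ${overheadAfter} tokens`);
```

Even with the smaller training message, the overhead grows linearly with the number of API calls, which is why trimming the message only delays the problem.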

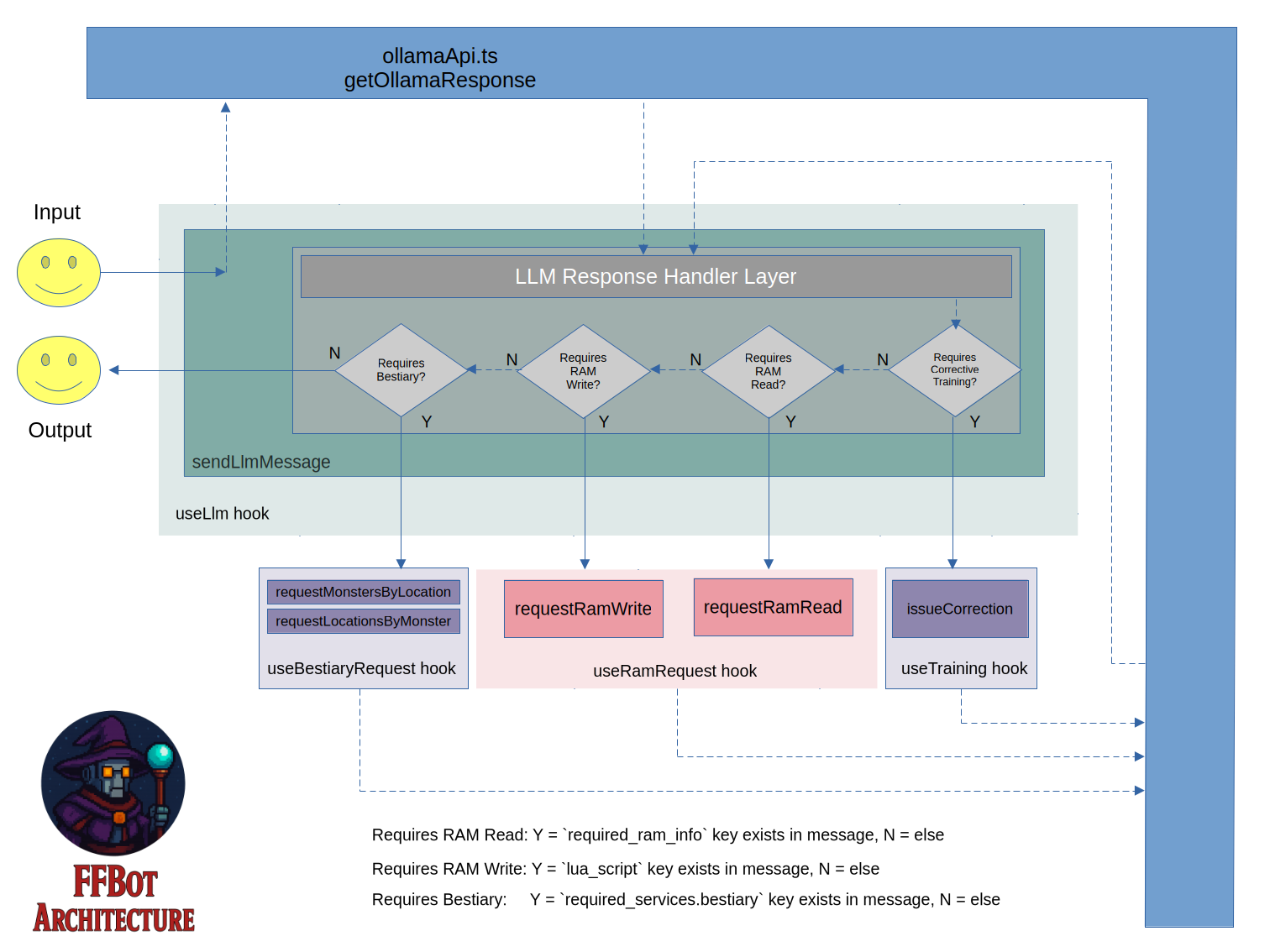

Refactor useLlm to Leverage Handler Pattern

Before shelving the project I needed to do something about my nagging discomfort with the cyclomatically complex code in the useLlm hook. In OOP I would rely on interfaces and abstract classes to reduce my complexity, but in the React paradigm, a handler pattern seemed like the alternative. I would value the opinion of a seasoned React developer on how they manage this kind of complexity.
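For readers unfamiliar with the idea, here is a minimal sketch of a handler pattern in TypeScript. It is not the actual useLlm code: the `LlmMessage` shape, the message types, and the handler names are all assumptions made up for illustration. The point is only that a long if/else or switch chain can be replaced by a list of small handlers, each of which decides whether it applies.

```typescript
// All names below are hypothetical and are not taken from the FFBot source.
type LlmMessage = { type: string; payload: string };

// Each handler knows which messages it accepts and what to do with them.
interface MessageHandler {
  canHandle(message: LlmMessage): boolean;
  handle(message: LlmMessage): string;
}

const bestiaryHandler: MessageHandler = {
  canHandle: (m) => m.type === "bestiary",
  handle: (m) => `looking up enemy: ${m.payload}`,
};

const chatHandler: MessageHandler = {
  canHandle: (m) => m.type === "chat",
  handle: (m) => `showing to user: ${m.payload}`,
};

// Supporting a new message type means adding a handler to this list,
// not adding another branch to one large function.
const handlers: MessageHandler[] = [bestiaryHandler, chatHandler];

function dispatch(message: LlmMessage): string {
  const handler = handlers.find((h) => h.canHandle(message));
  if (!handler) {
    throw new Error(`No handler for message type "${message.type}"`);
  }
  return handler.handle(message);
}

console.log(dispatch({ type: "bestiary", payload: "Imp" }));
```

In a hook, `dispatch` would be the only function the hook body needs to call, so the branching lives in the handler list rather than in the hook itself.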

Updated Architecture Document
