Chris Shinnimin

Final Fantasy Bot Project Journal

A fun personal project to learn LLMs and React, and rekindle my love of a favourite childhood game.

October 31, 2025

Prev | Project Journal Entries | Github | Next

Initial Langchain Results: Not What I Expected

Since my previous post, I've spent my free time over the past week or so getting an initial Langchain implementation working, and it's very exciting to have had my first success today. I have successfully:

- Split my instruction set to the LLM into multiple documents instead of one large document

- Chunked and embedded these multiple documents into a vector database

- Defined my RAM read request API as a Langchain tool (annotated with @tool in Python)

- Asked the LLM a simple question through Langchain and got a correct answer via Langchain's process of retrieving only the relevant instructions from the vector database, using reasoning to determine which tool to use to get the information it needs, and formulating a response
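To make the "retrieve only the relevant instructions" step concrete, here is a minimal, standard-library-only sketch. It is not the project's code and does not use Langchain: word-count vectors with cosine similarity stand in for a real embedding model and vector database, and the two instruction documents are invented for illustration.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split text into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = vectorize(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)
    return ranked[:k]


# Hypothetical instruction documents, invented for this example.
documents = {
    "ram_reads": "To read party gold, request a RAM read of the gold address and report the value.",
    "battle": "During battle, describe enemy hit points and suggest which spell to cast.",
}

# The "vector store" here is just a flat list of chunks.
store = [c for doc in documents.values() for c in chunk(doc)]
top = retrieve("How much gold does the party have?", store, k=1)
print(top[0])
```

Only the chunk about RAM reads is returned for the gold question, so only that chunk would need to be placed in the prompt.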

All of this felt like a great achievement, but when I added some logging to check my prompt token usage, I got a surprise. In my previous post I discussed my expectation that Langchain would help me reduce the application's prompt token usage. I gathered some initial data, which I will publish in full in a future post once I have finished my Langchain implementation. That data suggested that, depending on the chosen model, prompt token usage was between 23,000 and 30,000 for a three-message interaction. The code I have now only supports a single-message interaction, but I was shocked to see that single message requiring between 8,000 and 14,000 prompt tokens.

I had expected prompt token usage to be limited to the tokens retrieved from the vector store. Remember that I had broken down the documents and validated that Langchain was retrieving only the instructions relevant to each prompt. This contrasts with the previous chat completion endpoint solution, which passed the entire ~5,000 token instruction set to the LLM with every message. So I was expecting token usage for a single message to be just 728 tokens, in line with what I had validated as being retrieved from the vector database, but in reality 8,000 to 14,000 were used.

This made me realize something I had not considered: compared to direct usage of a chat completion API endpoint, Langchain may introduce significant token usage overhead 😱, at least at the smaller scale of my FFBot agent application. I'm still not sure whether something is wrong with my implementation. I am noticing that the Langchain AgentExecutor is requesting the RAM Read tool anywhere from four to eight times, which doesn't seem to make sense (it should only require one request).
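One possible contributor, offered as a hypothesis rather than a diagnosis: an agent loop makes a separate LLM call for each tool request plus one more for the final answer, and each call re-sends the prompt together with the tool steps taken so far. Prompt tokens summed over the whole run therefore grow with the number of tool requests. The sketch below models only that arithmetic. The 728 figure is the retrieved-token count mentioned above; the 300 tokens of fixed overhead (system prompt, tool schema) and the 150 tokens per recorded tool step are invented placeholders, not measurements.

```python
def agent_prompt_tokens(base_prompt: int, step_tokens: int, tool_calls: int) -> int:
    """Total prompt tokens summed over every LLM call in one agent run.

    Assumes one LLM call per tool request plus one final answering call,
    where call i (0-based) re-sends the base prompt plus i earlier tool steps.
    """
    llm_calls = tool_calls + 1
    return sum(base_prompt + i * step_tokens for i in range(llm_calls))


RETRIEVED_TOKENS = 728   # from the post: tokens retrieved from the vector database
ASSUMED_OVERHEAD = 300   # hypothetical: system prompt and tool schema
ASSUMED_STEP = 150       # hypothetical: tokens added by each recorded tool step

base = RETRIEVED_TOKENS + ASSUMED_OVERHEAD
for calls in (1, 4, 8):
    total = agent_prompt_tokens(base, ASSUMED_STEP, calls)
    print(f"{calls} tool call(s): {total} prompt tokens")
```

Under these made-up parameters, one tool request costs 2,206 prompt tokens while eight cost 14,652, so the number of tool requests has far more effect on the total than the size of the retrieved context. Logging the prompt tokens of each individual LLM call would show whether this is what is actually happening.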

Moving the NES API to Backend Python

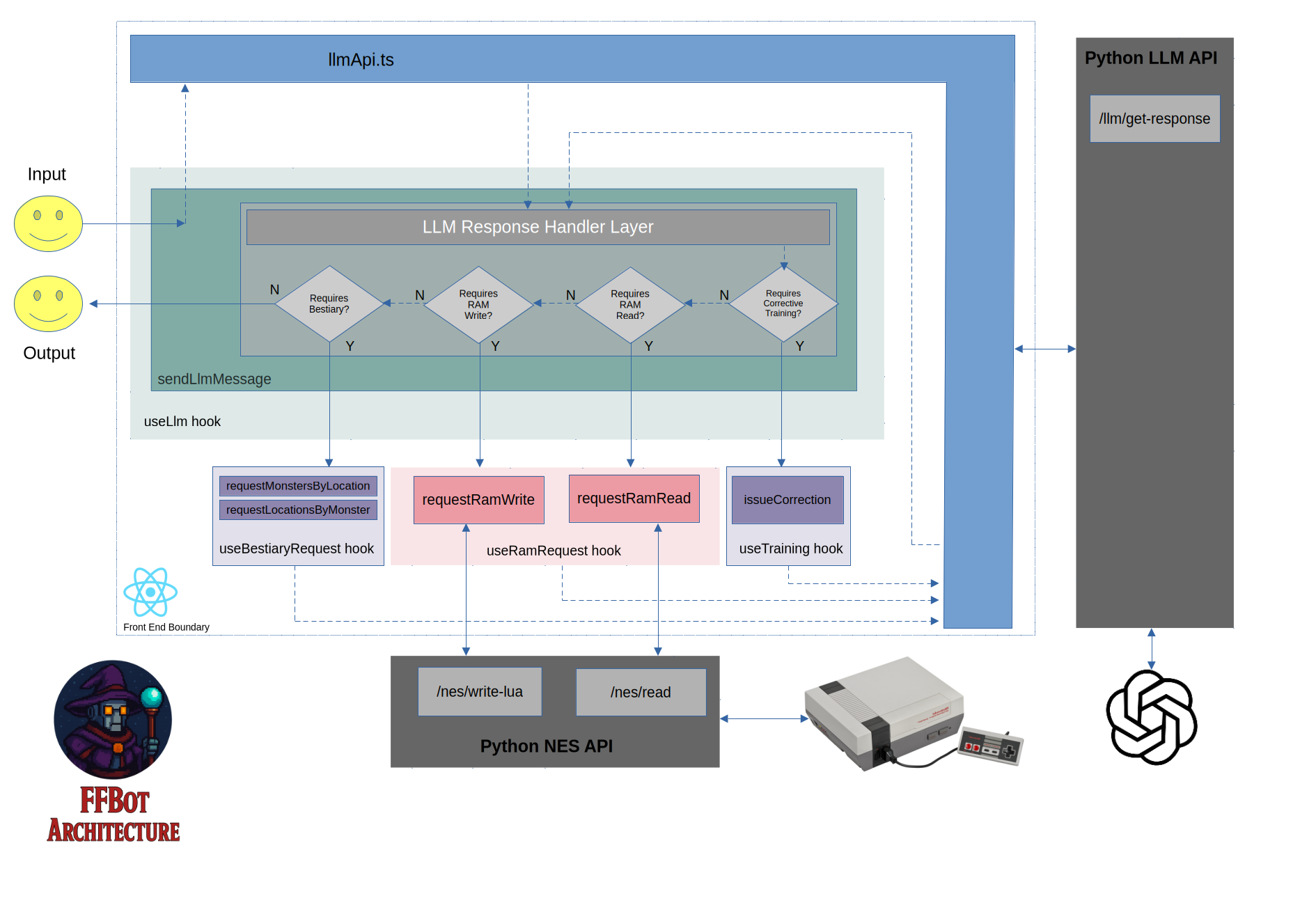

I also broke out the NES API into a back end API as I did with the LLM API previously. Here is an updated architecture diagram:

LLM Client Architecture and How Langchain Simplifies Things

The application now needs to diverge into two distinct flows, depending on which of two types of LLM provider is in use:

- A Chat Completion Endpoint

- Langchain

In the case of a chat completion endpoint, the strategy is to pass a full, large set of instructions to a chat completion API endpoint with every LLM interaction. In this large set of instructions we have to explain in English, with examples, how the LLM is expected to make requests to the Python backend API. By contrast, the Langchain approach uses Langchain to split the instructions into chunks, store them in a vector database, and retrieve only the instructions needed for any given request. The tools are provided using Langchain's built-in methods rather than being explained to the LLM in English.

Currently, chat completion endpoints are supported for OpenAI, OpenRouter.ai and Ollama (local). For Langchain, only OpenAI is supported. Since future providers could be added, an OOP architecture for clients was created.
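As a rough sketch of what such a client hierarchy could look like (an assumption for illustration, not the project's actual classes), the example below uses an abstract base class with one subclass per flow. All class, method, and variable names are hypothetical. `LangchainClient.build_messages` is only a stand-in that shows the difference in what reaches the model; in a real Langchain setup the library assembles the prompt itself.

```python
from abc import ABC, abstractmethod
from typing import Callable

# Support matrix as described in the post.
SUPPORTED_PROVIDERS = {
    "chat_completion": {"openai", "openrouter", "ollama"},
    "langchain": {"openai"},
}


class LLMClient(ABC):
    """Common interface for every client type (hypothetical name)."""

    kind: str  # set by each subclass

    def __init__(self, provider: str):
        if provider not in SUPPORTED_PROVIDERS[self.kind]:
            raise ValueError(f"{provider!r} is not supported for {self.kind}")
        self.provider = provider

    @abstractmethod
    def build_messages(self, user_message: str) -> list[dict]:
        """Return the messages that would be sent to the model."""


class ChatCompletionClient(LLMClient):
    """Sends the full instruction set with every interaction."""

    kind = "chat_completion"

    def __init__(self, provider: str, full_instructions: str):
        super().__init__(provider)
        self.full_instructions = full_instructions

    def build_messages(self, user_message: str) -> list[dict]:
        return [
            {"role": "system", "content": self.full_instructions},
            {"role": "user", "content": user_message},
        ]


class LangchainClient(LLMClient):
    """Sends only the instructions retrieved for this message."""

    kind = "langchain"

    def __init__(self, provider: str, retrieve: Callable[[str], str]):
        super().__init__(provider)
        self.retrieve = retrieve  # stand-in for a vector store lookup

    def build_messages(self, user_message: str) -> list[dict]:
        return [
            {"role": "system", "content": self.retrieve(user_message)},
            {"role": "user", "content": user_message},
        ]


clients = [
    ChatCompletionClient("ollama", full_instructions="(entire instruction set)"),
    LangchainClient("openai", retrieve=lambda q: "(only the relevant chunks)"),
]
for client in clients:
    system = client.build_messages("How much gold do we have?")[0]["content"]
    print(client.kind, client.provider, system)
```

With this shape, adding a provider means extending the support matrix, and adding a new flow means writing one more subclass, while calling code depends only on `LLMClient`.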

With the Langchain approach, all of the responsibilities of the "LLM Response Handler Layer" (see the first image, the high-level architecture) are handled by Langchain rather than the front end React app.

Next Steps

Now that I've seen some initial success with Langchain, I will, in order:

- Clean up the implementation.

- Provide support for conversations (currently only a single message is supported).

- Continue to explore the capabilities of Langchain.
