Chris Shinnimin

Final Fantasy Bot Project Journal

A fun personal project to learn LLMs and React, and rekindle my love of a favourite childhood game.

December 18, 2025


Beta Version 0.2, Langchain/Chroma Success!

It has been over a month since I last posted on the project. In that time I successfully completed the job search I began back at the start of September (I am VERY much looking forward to starting at Vidyard in the role of Manager, Software Development in January 2026!) and almost completely repainted my house. So there has been a lot going on, but I finally made some time to get the Langchain implementation for this project into a stable state.

In my previous post I discussed how my discovery that Langchain adds its own token overhead to complete its tasks might foil my attempt to reduce token usage. I now realize that concern was misplaced, and I believe I have succeeded in allowing my instruction set for the LLM to grow indefinitely without significantly increasing token usage (per-request token usage should remain roughly the same no matter how many instructions we add in the future). This and more is discussed in this post.

The New "Langchain Flow" (With Chroma!)

The application now has two primary flows, selected by how we set the LLM_PROVIDER environment variable. Previously, although we supported multiple providers (Ollama, OpenRouter or OpenAI), all of them were called through a direct HTTP request to the provider's API endpoint. This is what we will call the Chat Completion Flow. Going forward, we can also interact with the LLMs through Langchain. Langchain is an established framework that helps developers build robust agent applications and comes with a multitude of features from years of open source development. Whereas the Chat Completion Flow relied on our custom code to facilitate multi-step reasoning and tool requests, the new Langchain Flow leverages this established library instead.
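A minimal sketch of how a single environment variable could choose between the two flows. The accepted values, and the idea that a value such as `langchain` selects the Langchain Flow, are assumptions for illustration; the post does not say exactly which LLM_PROVIDER values map to which flow.

```python
import os
from enum import Enum
from typing import Mapping, Optional


class Flow(Enum):
    CHAT_COMPLETION = "chat_completion"  # direct HTTP request to the provider API
    LANGCHAIN = "langchain"              # Chroma retrieval + Langchain agent


# Hypothetical values: the project's real LLM_PROVIDER options may differ.
CHAT_COMPLETION_PROVIDERS = {"ollama", "openrouter", "openai"}
LANGCHAIN_PROVIDERS = {"langchain"}


def select_flow(env: Optional[Mapping[str, str]] = None) -> Flow:
    """Choose a flow from LLM_PROVIDER, reading os.environ unless a mapping is given."""
    source = os.environ if env is None else env
    provider = source.get("LLM_PROVIDER", "").strip().lower()
    if provider in LANGCHAIN_PROVIDERS:
        return Flow.LANGCHAIN
    if provider in CHAT_COMPLETION_PROVIDERS:
        return Flow.CHAT_COMPLETION
    # Fail fast rather than silently falling back to one of the flows.
    raise ValueError(f"Unsupported LLM_PROVIDER: {provider!r}")


print(select_flow({"LLM_PROVIDER": "OpenAI"}))  # Flow.CHAT_COMPLETION
```

Passing a plain dict instead of reading `os.environ` directly keeps the selection easy to test without touching the real environment.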

The new Langchain Flow also incorporates three Chroma vector databases. Although Chroma is completely separate from Langchain (no dependency of either on the other), the addition of the Chroma databases is a key element of what I am now calling the Langchain Flow, specifically because it is what allows the instruction sets to grow indefinitely while keeping token usage steady. As mentioned previously, Langchain itself gives us new capabilities in building out the agentic functionality - for example, simplifying the process of creating new tools for the agent - but it is Chroma that truly solved the token growth problem.

Chroma solves the problem by letting us run a similarity search against the contents of a database and retrieve only the most relevant chunks. So we can store our instructions to the LLM in these Chroma databases, retrieve only the instructions relevant to a specific request, and provide those to the LLM through Langchain. Pretty nifty.
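To make "similarity search" concrete, here is a toy version of the idea. Chroma compares real embedding vectors produced by an embedding model; this sketch swaps in a bag-of-words count vector and cosine similarity so it runs with only the standard library. It is not Chroma's API, and the example chunks are made up. (With Langchain's Chroma integration, one way to get the same "threshold plus k" behaviour is a retriever created with `search_type="similarity_score_threshold"`, though I have not confirmed that is how this project does it.)

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int, min_similarity: float) -> List[str]:
    """Return at most k chunks whose similarity to the query is >= min_similarity."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored = [(score, chunk) for score, chunk in scored if score >= min_similarity]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # most similar first
    return [chunk for _, chunk in scored[:k]]


# Hypothetical chunks for illustration only.
CHUNKS = [
    "To open a chest, walk up to it and press A.",
    "The mystic key opens locked doors.",
    "Poisoned characters move to the back of the party.",
]
print(retrieve("Give me the mystic key", CHUNKS, k=2, min_similarity=0.3))
# ['The mystic key opens locked doors.']
```

Only the chunk that shares meaningful words with the request survives the threshold, so only that text would be added to the prompt.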

More on the Three Chroma Databases

We now have three separate vector databases that are searched before invoking Langchain for any given message from the user. The first contains "instructions": larger, complete markdown documents that explain how to perform specific tasks. In the instruction database, each complete markdown document becomes its own chunk. The second contains "hints", which are stored as a single markdown file in the repository but are chunked so that each line in the file becomes its own chunk, making hints smaller bits of information (with lower token usage) than instructions. The final one contains "addresses", that is, memory addresses that might be relevant for a given request. The memory address markdown file is also chunked so that each address becomes its own chunk. For each database we can independently tune two values: the similarity threshold (how similar a chunk must be to the request in order to be returned) and k (the maximum number of chunks to return). This design gives us a dynamic way to cap the number of input tokens that will ultimately form the messages to the LLM (allowing us to scale the total instruction set indefinitely without increasing token usage), while still providing all the relevant information required for any given request.
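A minimal sketch of the two chunking strategies and the per-database settings described above. The field names, the tuning values, and the assumption that the address file lists one address per line are all hypothetical; the project's real loaders may differ.

```python
from dataclasses import dataclass
from typing import Dict, List, Mapping


@dataclass(frozen=True)
class RetrievalConfig:
    """Per-database retrieval settings (hypothetical field names)."""
    min_similarity: float  # how similar a chunk must be to be returned
    k: int                 # maximum number of chunks returned


def chunk_instructions(documents: Mapping[str, str]) -> List[str]:
    """Instructions: each complete markdown document becomes a single chunk."""
    return [text.strip() for text in documents.values() if text.strip()]


def chunk_by_line(markdown: str) -> List[str]:
    """Hints (and, assumed here, addresses): each non-empty line becomes its own chunk."""
    return [line.strip() for line in markdown.splitlines() if line.strip()]


def max_chunks_per_request(configs: Mapping[str, RetrievalConfig]) -> int:
    """Upper bound on retrieved chunks per message: the sum of the k values."""
    return sum(cfg.k for cfg in configs.values())


# Hypothetical tuning values, not the project's real settings.
CONFIGS: Dict[str, RetrievalConfig] = {
    "instructions": RetrievalConfig(min_similarity=0.5, k=2),
    "hints": RetrievalConfig(min_similarity=0.4, k=5),
    "addresses": RetrievalConfig(min_similarity=0.4, k=5),
}

print(chunk_by_line("- Hint one\n\n- Hint two\n"))  # ['- Hint one', '- Hint two']
print(max_chunks_per_request(CONFIGS))  # 12
```

Because the bound depends only on the k values (and the size of individual chunks), adding more documents or lines to any database changes what can be retrieved, not how much.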

Show Me Some Data!

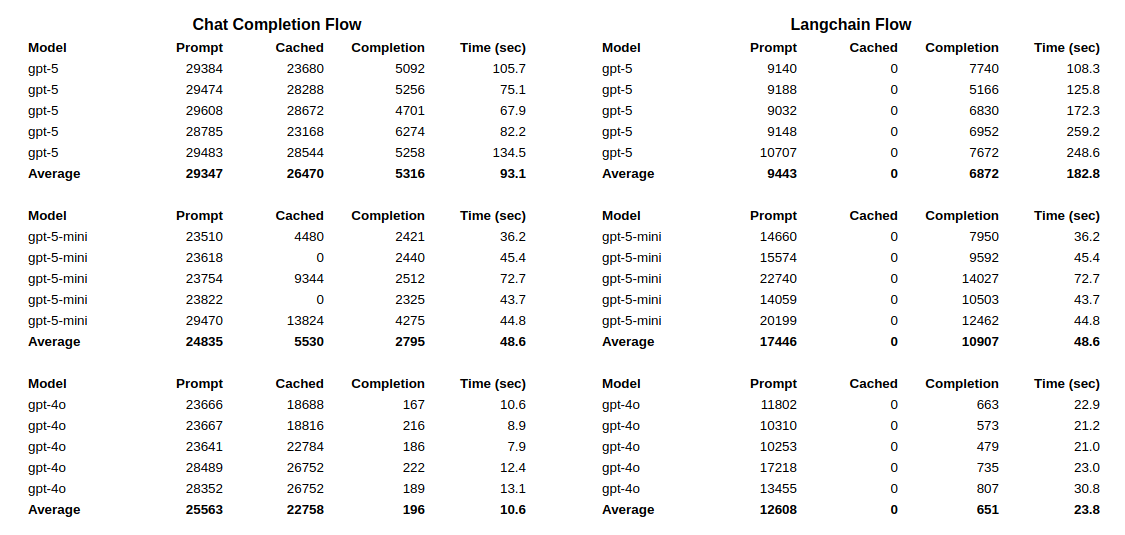

I compared the old Chat Completion Flow (which sends the entire instruction set to the LLM with each message) against the new Langchain Flow (which retrieves only the information relevant to each request). Although the results show only modest improvements for the Langchain Flow, I believe they would become more and more pronounced as the instruction set grows. Testing was performed with three separate models, and each model was tested five times. In each test, we started a new conversation (which requires token usage in the Chat Completion Flow but not in the Langchain Flow) and issued 3 specific questions and commands:

- "Where am I?"

- "What should I do next?"

- "Give me the mystic key."

Here are the raw results:

Here are some thoughts about the data:

- Overall prompt token usage is down for all models. While this seems good on the surface, notice that when using Langchain, tokens never seem to be cached. Providers typically bill cached input tokens at a steep discount (for some models, only about 1/10 of the normal rate). Since most of the tokens seem to get cached for gpt-5 and gpt-4o in the Chat Completion Flow, that would be worth factoring into projected costs if this were a serious business project.

- Overall time for messages to be answered in the UI nearly doubles for gpt-5 and gpt-4o with the Langchain Flow. I noticed this is mostly due to the Chroma database similarity searches. So the familiar architectural concept of tradeoffs rears its head again: if we want to use Chroma to keep our token usage in check, it will come at the cost of response time.

- Completion token usage seemed to quadruple for both gpt-5-mini and gpt-4o in the Langchain Flow. This means that the final responses of the LLM were much more verbose for some reason. I am not sure if that was related to Langchain somehow, or if I possibly made a mistake and ran the tests with different temperature settings.

- The gpt-5 model is basically unusable in either flow due to long wait times.
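To see why the caching point in the first bullet matters, here is a rough cost model, assuming cached prompt tokens are billed at a fixed fraction of the normal input price. The price, the cache rate and the 20,000 cached tokens are hypothetical, not measurements from these tests or any provider's current price list; 23,500 and 12,500 come from the thought experiment and the observed range discussed below.

```python
def prompt_cost(prompt_tokens: int, cached_tokens: int,
                price_per_million: float, cached_rate: float) -> float:
    """Dollar cost of prompt tokens when cached tokens bill at cached_rate x the normal price."""
    if not 0 <= cached_tokens <= prompt_tokens:
        raise ValueError("cached_tokens must be between 0 and prompt_tokens")
    if not 0.0 <= cached_rate <= 1.0:
        raise ValueError("cached_rate must be between 0 and 1")
    uncached = prompt_tokens - cached_tokens
    billable = uncached + cached_tokens * cached_rate  # cached tokens count fractionally
    return billable * price_per_million / 1_000_000


# Hypothetical: $1.00 per million input tokens, cached tokens at 1/10 of that.
chat_completion = prompt_cost(23_500, cached_tokens=20_000, price_per_million=1.00, cached_rate=0.1)
langchain = prompt_cost(12_500, cached_tokens=0, price_per_million=1.00, cached_rate=0.1)
print(f"Chat Completion: ${chat_completion:.4f}, Langchain: ${langchain:.4f}")
# Chat Completion: $0.0055, Langchain: $0.0125
```

Under these assumed numbers, the mostly cached Chat Completion run is cheaper despite sending nearly twice as many prompt tokens, which is exactly why raw token counts alone can mislead.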

My final and most important thought about this data is that I expect the difference in prompt token usage between the two flows to become more pronounced as the total size of the instructions grows. The gpt-5 model cut its usage by around 1/3 and the gpt-4o model cut it by roughly 1/2. Let's focus on the gpt-4o model. The full instruction set of the Chat Completion Flow is about 4700 tokens. Notice that the final two runs of the Chat Completion Flow tests for gpt-4o used roughly that many more tokens than the first three. That is because in the first 3 tests, the bot app used 5 messages to get to its final answer, while in the last two it used 6. For this thought experiment, let's assume it always used 5, so the average token usage was 23,500 (4700 x 5). The way the old Chat Completion Flow works, if we needed to add more instructions for the bot app to handle different questions or problems, this number would grow even though the new instructions aren't relevant to the questions in this particular test. So if the total instruction set grew to 20,000 tokens, we would likely see 100,000 tokens used in this test (20,000 x 5). However, I would expect token usage in the new Langchain Flow to remain at around 12,000-13,000, where the current tests indicate. It would be great to validate this hypothesis later once I have added a significant amount of additional instructions.
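The thought experiment above can be written out as a tiny projection. Like the paragraph, it ignores conversation history and tool output, assumes 5 messages per test, and holds the Langchain Flow flat at 12,500 tokens (the midpoint of the observed 12,000-13,000 range). That flat line is the hypothesis still to be validated, not a measurement at 20,000 tokens.

```python
LANGCHAIN_ESTIMATE = 12_500  # midpoint of the ~12,000-13,000 tokens observed for gpt-4o


def chat_completion_prompt_tokens(instruction_tokens: int, messages: int) -> int:
    """Chat Completion Flow: the full instruction set is sent with every message."""
    return instruction_tokens * messages


for size in (4_700, 20_000):
    total = chat_completion_prompt_tokens(size, messages=5)
    print(f"{size}: {total} tokens, {total / LANGCHAIN_ESTIMATE:.2f}x the Langchain estimate")
# 4700: 23500 tokens, 1.88x the Langchain estimate
# 20000: 100000 tokens, 8.00x the Langchain estimate
```

The Chat Completion cost scales linearly with the instruction set, while the Langchain side is bounded by what retrieval returns, so the gap widens as instructions are added.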

Quick and Effective Tool Creation

One of the other benefits I learned Langchain can provide is quick and easy tool creation. Back when I released Beta Version 0.1, I mentioned in the video accompanying the blog post that the one problem I hadn't solved yet was how to automate the monotonous task of reordering my party members after they get poisoned (the game reorders them automatically whether you like it or not, and you always have to manually put them back afterwards). I had initially assumed that all of the data about each character would be stored in the same memory locations forever, and that a single memory address stored each character's position in the party order and could easily be changed. It turns out the character order is NOT stored as a variable; it is implied by the memory locations of the data. Each character has 64 bytes of data stored about them, including their name, hit points, status, etc. When you change the order of a character in the game, it literally swaps all 64 bytes to a new location in memory. Explaining to the LLM how to do this proved to be a fool's errand. Enter Langchain. In under 30 minutes, using GitHub Copilot, I was able to create a Python API call that could perform the swap, create a tool wrapper for Langchain, and write very simple English instructions telling the bot to just call the tool when it needs to do the reordering. Problem solved quickly and easily. Thanks Langchain.
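The heart of a swap like this is exchanging two fixed-size blocks of memory. A minimal sketch operating on a `bytearray` stand-in for emulator RAM: the function name, the base offset, and the four-slot party are assumptions (the post does not give the real addresses or API), and a real version would read and write through whatever emulator interface the bot app uses. Wrapping a function like this for Langchain can be as small as decorating it with `@tool` from `langchain_core.tools`, which uses the function's docstring as the tool description the model sees.

```python
CHARACTER_SIZE = 64  # bytes per character record, as described above


def swap_party_slots(memory: bytearray, base: int, slot_a: int, slot_b: int,
                     party_size: int = 4) -> None:
    """Swap two characters' records in place; party order is implied by record position."""
    if base < 0 or base + party_size * CHARACTER_SIZE > len(memory):
        raise ValueError("party records do not fit inside memory")
    for slot in (slot_a, slot_b):
        if not 0 <= slot < party_size:
            raise ValueError(f"slot {slot} is outside 0..{party_size - 1}")
    if slot_a == slot_b:
        return
    a = base + slot_a * CHARACTER_SIZE
    b = base + slot_b * CHARACTER_SIZE
    record_a = bytes(memory[a:a + CHARACTER_SIZE])  # copy before overwriting
    memory[a:a + CHARACTER_SIZE] = memory[b:b + CHARACTER_SIZE]
    memory[b:b + CHARACTER_SIZE] = record_a


# Toy 256-byte buffer where every byte of slot n holds the value n.
ram = bytearray(b"".join(bytes([n]) * CHARACTER_SIZE for n in range(4)))
swap_party_slots(ram, base=0, slot_a=0, slot_b=3)
print([ram[n * CHARACTER_SIZE] for n in range(4)])  # [3, 1, 2, 0]
```

Moving whole 64-byte records, rather than individual fields, mirrors what the game itself does, so name, hit points and status always travel together.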

What's Next?

The project will likely go on another pause now. We are entering the holiday season, and immediately afterwards I will be starting my new job, so my focus will largely be there. I hope to someday continue making improvements to this bot, though. It has been incredibly fun to learn these new technologies and solve these problems, especially with such a playful use case that reminds me of the childhood inspirations that led me to a career in software development in the first place.


Demo of Beta Version 0.2

COMING SOON!